Lighthouse. A tool to guide us to a better web?

Update

Since first writing this post I have built a small tool that helps in running a full analysis on a given website. It is available at https://github.com/b-m-f/fafu.

=============================================

Large parts of the web are broken. Most of this is not directly noticeable, since our machines have become so extremely fast, but I am convinced that we have a lot of room for improvement.

Take, for example, any old (2010ish) device you have lying around and try to browse the web with it for a day. Chances are high that some sites will be nearly unusable. As a web developer, this is not a state I am comfortable with, so I am always keen on tech that makes the maintenance of websites easier for everyone.

One such tool is Lighthouse. It is supposed to make this task easier, a claim I want to put to the test.

The goal is to improve my theme for Ghost so that its content can reach as many people as possible. This should be done in an extremely efficient manner, being conscious of the waste that bad engineering can entail and good engineering can prevent.

The Internet’s data centers alone may already have the same CO2 footprint as global air travel.

https://internethealthreport.org/2018/the-internet-uses-more-electricity-than/

Since this is a systemic problem, I want to share my insights and also document them for myself as a reference.

So the first thing to do is to get a performance rating of 100 points in Lighthouse.

Lighthouse

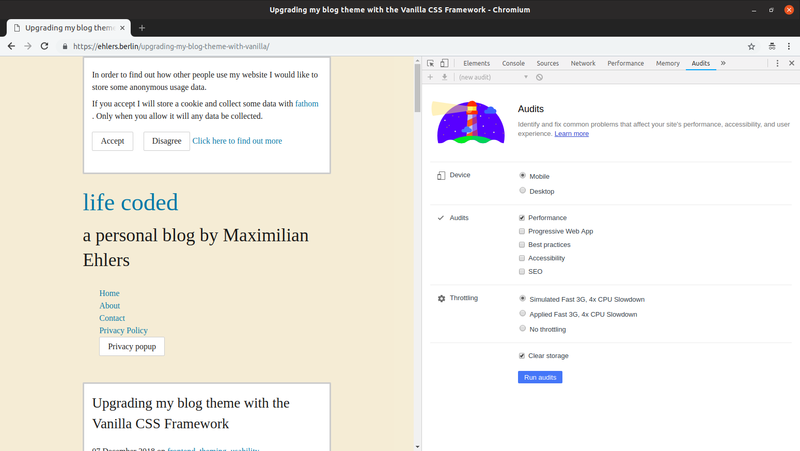

Since Lighthouse is only available in Chromium-based browsers, I first had to install one and then open the developer tools to start the first test.

The page that I chose for the first round of tests is https://ehlers.berlin/upgrading-my-blog-theme-with-vanilla/. I picked it because of its more complex HTML structure and its images, compared to, for example, the homepage.

Here are both the settings and the result

91. That is already a really high score, but let's drive it up.

The first thing that stands out is the very fast time to both First Contentful Paint and First Meaningful Paint, which are identical at 0.8 seconds, in contrast to the slow Time to Interactive.

While this could have many reasons, such as JavaScript executing in the background, I will fix the suggested things first and then come back to revisit other theories. Get the low-hanging fruit first.

This way I can also rule out some things in case things get complicated.

Images

Newer versions of Ghost support dynamic image resizing, a feature that makes it possible to fetch a smaller version of any given image by changing the request URL.
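As a rough illustration of that URL scheme: the theme code further down requests a resized variant by inserting a `size/w<width>` segment after `/content/images`. The helper name `sizedImageUrl` and the sample path are hypothetical, and the sketch assumes images are served from `/content/images/`, as they are in this post.

```javascript
// Hypothetical helper: build the URL of a resized variant of a Ghost image.
// Assumes the image path contains "/content/images/", as in this post's theme code.
function sizedImageUrl(url, width) {
  const marker = "/content/images/";
  const index = url.indexOf(marker);
  if (index === -1) {
    // Not a locally hosted Ghost image, so leave it untouched.
    return url;
  }
  const prefix = url.slice(0, index + marker.length);
  const rest = url.slice(index + marker.length);
  return `${prefix}size/w${width}/${rest}`;
}

console.log(sizedImageUrl("/content/images/2019/02/lighthouse.png", 600));
// -> /content/images/size/w600/2019/02/lighthouse.png
```

Returning external URLs unchanged mirrors the check in the theme code, which only rewrites images hosted under `/content`.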

Unfortunately though, this is not enabled by default when retrieving the post content for the theme, so a JavaScript solution is needed to leverage this.

Here is the solution I went with until the feature ships directly in Ghost:

post.hbs

    {{#post}}
    <section class="post__content">
      <noscript>
        {{content}}
      </noscript>
    </section>
    <script src="{{asset "built/post-content.js"}}"></script>
    {{/post}}

post-content.js

    // Build srcset, sizes and src attributes that point at Ghost's resized image variants.
    function generateResponsiveImage(url) {
      const urlSplit = url.split("content/images");
      const start = "/content/images/size/";
      const end = urlSplit[1];
      return `srcset="${start}w100${end} 100w,
        ${start}w300${end} 300w,
        ${start}w600${end} 600w,
        ${start}w1000${end} 1000w"
        sizes="(max-width: 1000px) 400px, 700px"
        src="${start}w600${end}"
      `;
    }

    function improvePostPerformance() {
      const contentSection = document.querySelector(".post__content");
      // With JavaScript enabled, the <noscript> content is available as a plain string.
      let content = contentSection.querySelector("noscript").innerHTML;
      const images = content.match(/<figure.+?figure>/g) || [];
      const updatedImages = images.map(image => {
        const src = /src="([^"]*)/.exec(image)[1];
        // Only rewrite images hosted under /content; external ones stay untouched.
        if (src.split("/")[1] === "content") {
          return image.replace(/src="[^"]*"/, generateResponsiveImage(src));
        } else {
          return image;
        }
      });
      images.forEach((image, index) => {
        content = content.replace(image, updatedImages[index]);
      });
      contentSection.innerHTML = content;
    }

    improvePostPerformance();

The idea is to provide the normal output for any browsers that have JavaScript disabled and to enhance all image output with srcset and sizes attributes on devices that have JavaScript active.
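To make the effect of those two attributes concrete, here is a simplified model of how a browser might choose a file, using the widths and the `sizes` value from `generateResponsiveImage`. Real browsers are free to pick differently (for example, based on what is already cached), and `slotWidth` and `pickCandidate` are hypothetical names, so treat this as an approximation only.

```javascript
// Widths offered in the srcset above.
const candidates = [100, 300, 600, 1000];

// Models sizes="(max-width: 1000px) 400px, 700px".
function slotWidth(viewportWidth) {
  return viewportWidth <= 1000 ? 400 : 700;
}

// Simplified: take the smallest candidate that covers the slot in device pixels,
// falling back to the largest one available.
function pickCandidate(viewportWidth, devicePixelRatio = 1) {
  const needed = slotWidth(viewportWidth) * devicePixelRatio;
  const fitting = candidates.filter(width => width >= needed);
  return fitting.length > 0 ? fitting[0] : candidates[candidates.length - 1];
}

console.log(pickCandidate(375));     // 600
console.log(pickCandidate(375, 2));  // 1000
console.log(pickCandidate(1440));    // 1000
```

In this model a phone with a standard-density screen ends up with the 600px file instead of the full-size upload, which is where the savings come from.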

While native Ghost support would obviously be the best solution (the core team also has this in mind, but needs to work through some technical difficulties), this approach still works very well, as it offers:

- responsive images on post content

- a fallback for browsers without JavaScript

- a small data footprint, since the JavaScript version loads all content from the `<noscript>` element

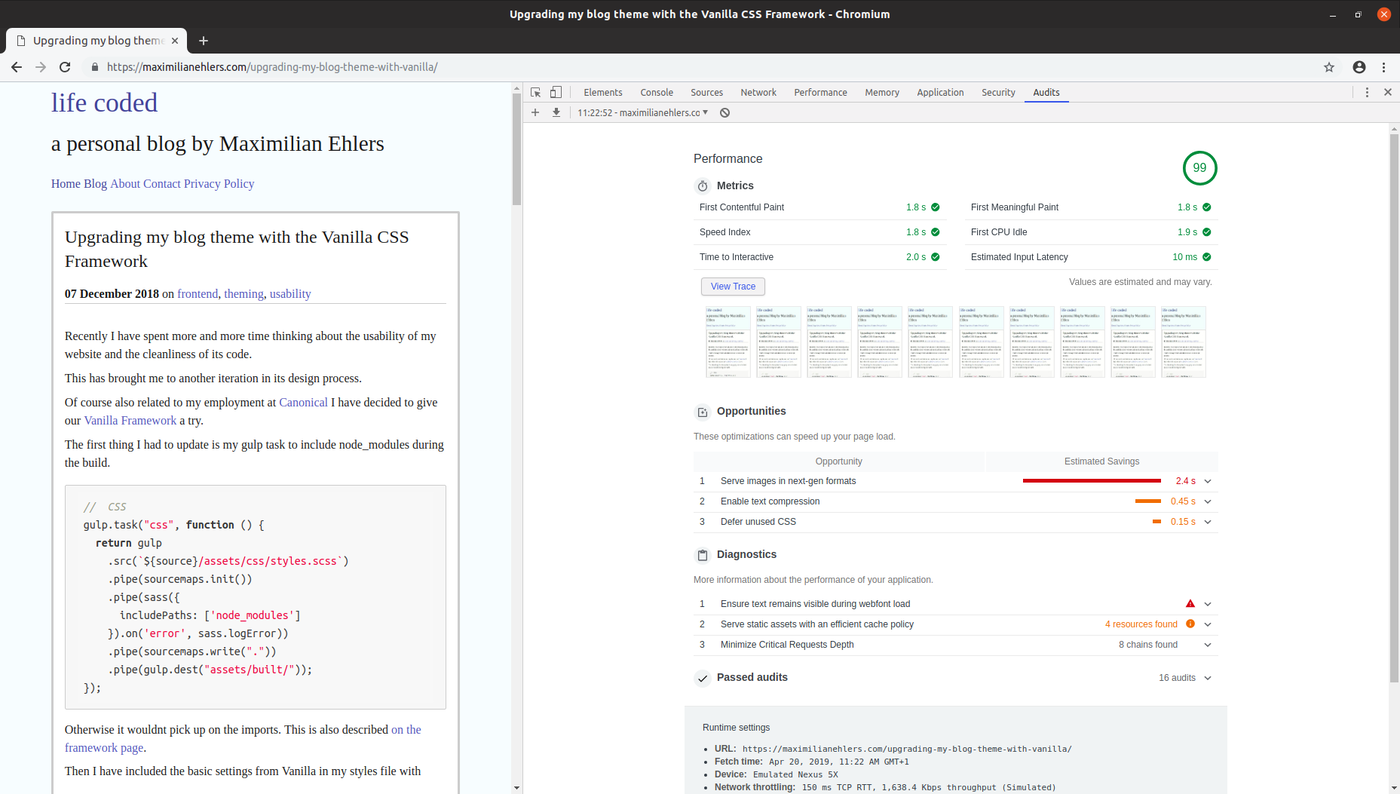

Running Lighthouse again now results in the following:

99, wow. That is a great performance boost of 8 points.

The only thing that stands out now is the suggestion to serve images in newer formats, such as JPEG2000.

This is a good idea when you have full control over the image service, as pictures could be converted to modern formats automatically when they are uploaded. Ghost does not support this yet, so the only solution here is to convert the images manually and then add those additional sources to the images via JavaScript, leveraging the `<picture>` element.
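The proof of concept itself is not shown in this post, so the following is only a sketch of what adding such a source could look like: it wraps an `<img>` tag in a `<picture>` element with a JPEG2000 `<source>` and keeps the original image as the fallback. The function name `wrapWithJp2Source`, the sample path, and the assumption that a `.jp2` file sits next to every original are made up for illustration.

```javascript
// Hypothetical helper: wrap an <img> tag in <picture> with a JPEG2000 source.
// Assumes a converted .jp2 file exists next to the original image.
function wrapWithJp2Source(imgTag) {
  const match = /src="([^"]*)\.(png|jpe?g)"/i.exec(imgTag);
  if (!match) {
    // No src with a known extension: return the tag unchanged.
    return imgTag;
  }
  const jp2Url = `${match[1]}.jp2`;
  // Browsers without JPEG2000 support skip the <source> and load the <img>.
  return `<picture><source type="image/jp2" srcset="${jp2Url}">${imgTag}</picture>`;
}

console.log(wrapWithJp2Source('<img src="/content/images/2019/02/chart.png" alt="Chart">'));
```

Because the `<source>` carries a `type` attribute, a browser that cannot decode the format never has to download the `.jp2` file to find that out.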

Converting the pictures

Using ImageMagick and a simple for loop in Bash, the images are quickly converted to the new format:

    for i in *.png ; do convert "$i" "${i%.*}.jp2" ; done
    for i in *.jpg ; do convert "$i" "${i%.*}.jp2" ; done
    for i in *.jpeg ; do convert "$i" "${i%.*}.jp2" ; done

I ran this directly on the content of my Ghost development instance.

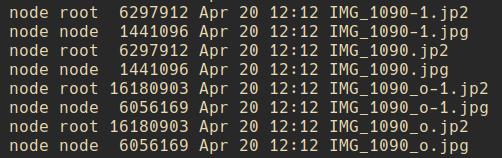

Image sizes increasing after conversion to JPEG2000

Weirdly, though, the images seemed to increase in size, so there must be a configuration setting I am missing.
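One way to guard against this, sketched here under assumptions, would be to keep a converted file only when it is actually smaller than its original. The helper name `worthwhileConversions`, the shape of the entries, and the byte counts are all made up for illustration; they are not measurements from my images.

```javascript
// Hypothetical helper: keep only conversions that are smaller than the original.
// Each entry is { name, originalBytes, convertedBytes }; the numbers below are made up.
function worthwhileConversions(files) {
  return files
    .filter(file => file.convertedBytes < file.originalBytes)
    .map(file => file.name);
}

const sample = [
  { name: "header.png", originalBytes: 120000, convertedBytes: 90000 },
  { name: "photo.jpg", originalBytes: 80000, convertedBytes: 140000 },
];

console.log(worthwhileConversions(sample)); // [ 'header.png' ]
```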

In addition, Ghost has no support for these image types:

Newer image types are not yet supported

So, naturally, the dynamic sizing for responsive images will not work either:

Sizing does not work with JPEG2000

Instead, I just provided a normal src attribute for JPEG2000 and ran the Lighthouse test:

A 100 score

100, there it is, despite the website now being technically degraded a bit.

Conclusion

My theme is fast, no doubt. But there is still some room for improvement.

Due to the lack of image format support in Ghost, I immediately removed the proof-of-concept implementation for modern formats from the theme again. I will keep it around on a branch for the future.

The 1 point on the performance scale does not outweigh the actual realities and integrations that we face in the wild today, but the score can surely serve as a good indicator of possible improvements.

That is the general impression I am getting of Lighthouse as well.

It is a great tool and finally some good news from Google.

It is not perfect, but it can give some great feedback for picking low-hanging fruit.

Some suggestions might not fit every setup, though, and can thus lead to confusion.

Inlining CSS, for example, does not make sense for me, since all pages share the same styles and caching kicks in immediately.

So, 99 points is good enough for me, and I am looking forward to new updates to Ghost. As far as simplicity and user and developer experience go, it is my favourite CMS and already ahead of WordPress in a few things. Even though I raised some negative aspects, I support it, and if the team makes contributions easier I might be able to help out with these problems.

Okay, but what about the sustainability problems?

Consider the data that gets saved on pictures by caching different sizes. A website with more traffic will accumulate quite a wasteful footprint when it misses things like this. The web has all kinds of reputations, some of them deserved. It is our responsibility as developers to do the due diligence of keeping it healthy and efficient. With tools like Lighthouse and a Jiroesque attitude we can save it.

Do you think I have missed or misrepresented something, or did I do a great job? Let me know in the comments.

Next up on the frontend side will be an accessibility assessment of my page and, you guessed it, I am going to shoot for a score of 100.

Notes

If you look closely at the screenshots, you will notice that I changed the domain and styling while writing the post. Other than that, the theme and content were still the same.